Claim: An Indian news broadcast praised Pakistan.

Fact: The claim is false. The audio was likely generated through AI voice synthesis and overlaid on a real news broadcast. The headlines in the footage do not refer to Pakistan.

A clip of an Indian news broadcast has been shared online, containing the following text:

پاکستان پوری دنیا پر چھا گیا بھارتی میڈیا

[Translation: Pakistan is taking over the whole world – Indian media]

In the clip, the anchor speaks about Pakistan’s recent rise in global influence. She notes that Pakistan, once dismissed or underestimated by India, is now making significant strides in areas like digital technology and modern defense.

The anchor also mentions a recent international summit where Pakistan’s presentation was powerful enough to leave the audience silent, surpassing India’s influence. She adds that there is an internal debate in India, among media, experts, and government officials, about India losing its leadership edge on the world stage. The anchor concludes with the assertion that “the game has changed”, and that Pakistan is no longer a minor regional player but a serious global contender.

Fact or Fiction?

Soch Fact Check first reverse-searched keyframes from the viral video to trace its original source, but we could not find any broadcast that matched the viral clip.

We did, however, note a key discrepancy at the very start of the video: the anchor’s lip movements were not in sync with the audio, suggesting that she was actually saying something else.

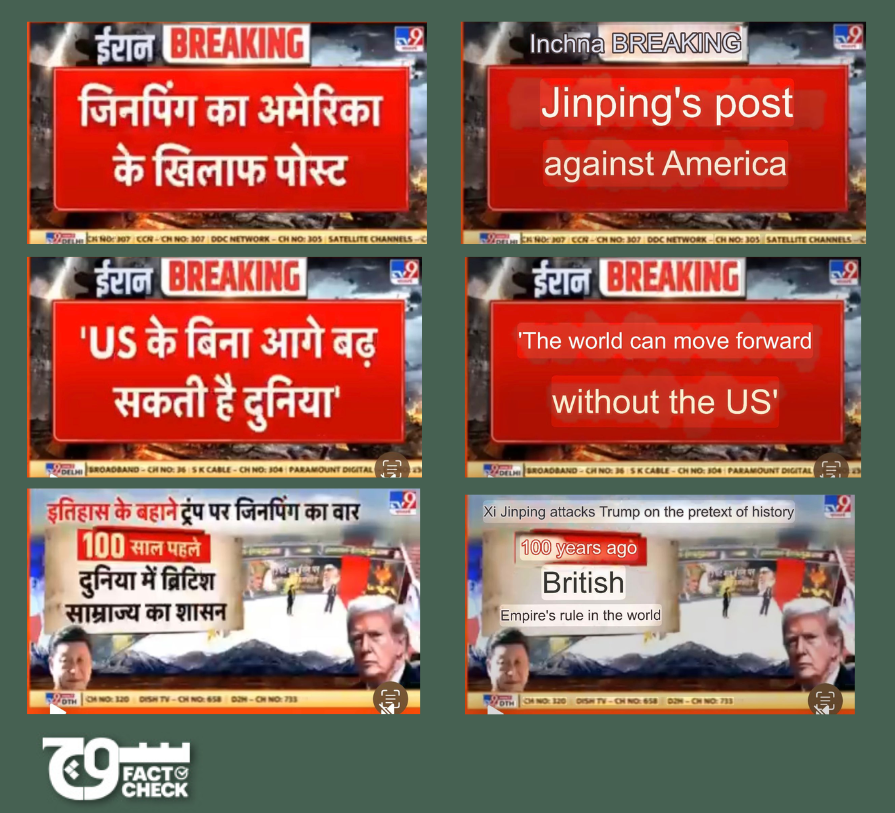

Crucially, the Hindi text in the clip does not mention Pakistan. Using Google Lens to translate, we found that the headlines reference Xi Jinping, Donald Trump, and the US.

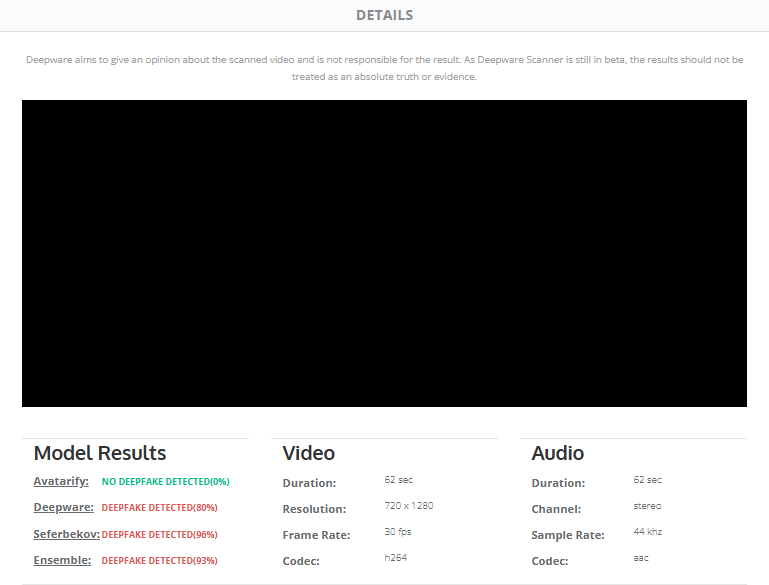

Suspecting that the video had been altered, we ran it through Deepware Scanner, an AI-based tool used to detect manipulated or synthetic media, particularly deepfakes. It uses multiple detection models to analyse visual and audio cues that may indicate tampering. The results were as follows:

Out of the four models, three indicated a high likelihood of tampering. The deepware model, which analyses facial inconsistencies, unnatural blinking, distortions, and audio-visual mismatches, rated the video 80% likely to be a deepfake. The Seferbekov model examines frame-level anomalies, especially in facial texture, lighting, and blending artifacts. It rated the clip at 96%, strongly indicating that the video is a deepfake.

Lastly, Ensemble combines the outputs of multiple models, including the above two, to generate a more balanced and robust prediction. The idea is to reduce false positives and increase overall reliability. It gave a 93% detection confidence, reinforcing the deepfake classification.
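For readers unfamiliar with ensembling, the idea can be illustrated with a minimal sketch. It assumes plain averaging and a hypothetical 0.5 flagging threshold; this article does not describe how Deepware Scanner actually combines its models, and the function name `ensemble_score` is ours. Only the two scores reported above are used as input, so the result is not expected to reproduce the 93% figure.

```python
from statistics import mean


def ensemble_score(scores, threshold=0.5):
    """Average per-model deepfake probabilities and list models at or above threshold.

    Assumption: simple averaging. The real tool's combination rule is unknown.
    """
    if not scores:
        raise ValueError("at least one model score is required")
    avg = mean(scores.values())
    flagged = sorted(name for name, s in scores.items() if s >= threshold)
    return avg, flagged


# Only the two per-model scores reported in this article; the others are not listed.
reported = {"deepware": 0.80, "seferbekov": 0.96}
avg, flagged = ensemble_score(reported)
print(f"average={avg:.2f}, flagged by: {', '.join(flagged)}")
```

Averaging dampens the effect of any single model that is badly wrong on a given clip, which is why ensembles are commonly used to reduce false positives.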

We also scanned the audio using Resemble Detect, a tool designed for real-time detection of deepfake audio across various media types, which rated the audio as “fake.”

Shaur Azher, an audio engineer at our sister company Soch Videos, analysed the audio of the broadcast clip. He observed the following signs of artificiality:

- Synthetic frequencies: The audio spectrogram reveals the presence of synthetic or digitally generated frequencies throughout the recording. These frequencies do not correspond with natural vocal harmonics or environmental sound patterns.

- Temporal irregularities: There are noticeable pauses and large temporal gaps between individual words, indicating potential digital manipulation, automated speech generation, or extensive post-production editing.

- Equalisation profile: The frequency response exhibits a generic equalisation curve, with elevated low-frequency energy and a low-pass filter applied at approximately 15 kilohertz (kHz). This suggests artificial enhancement or compression, inconsistent with raw broadcast recordings.

- Absence of environmental acoustics: The recording contains no audible breathing sounds, ambient noise, or room tone. Such absence is atypical for live or field recordings and is consistent with synthetic or processed voice generation.

- Vocal characteristics: The speech demonstrates mono-tonal delivery lacking natural pitch variation and human articulation nuances, reinforcing the likelihood of non-organic voice synthesis or heavy audio reconstruction.

“The findings collectively suggest that the recording is not from a natural live broadcast,” Azher concluded. He added that “the video has likely undergone digital manipulation or voice synthesis generation.”
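Two of the signs listed above, the low-pass cutoff near 15 kHz and the long gaps between words, can be approximated in code. The sketch below is an illustration only and is not the tooling or method Azher used. The function names, the silence threshold, the 300 ms minimum pause and the 20 ms frame size are all hypothetical choices, and the demo runs on a synthetic tone rather than the viral clip.

```python
import math


def high_band_energy_ratio(samples, sample_rate, cutoff_hz):
    """Approximate fraction of spectral energy at or above cutoff_hz.

    Uses a naive DFT, so it is only practical for short frames;
    a real analysis would use an FFT library.
    """
    n = len(samples)
    total = high = 0.0
    for k in range(1, n // 2 + 1):  # skip the DC bin
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        power = re * re + im * im
        total += power
        if k * sample_rate / n >= cutoff_hz:
            high += power
    return high / total if total else 0.0


def find_pauses(samples, sample_rate, frame_ms=20, silence_rms=0.01, min_pause_ms=300):
    """Return (start_s, end_s) spans where frame RMS stays below silence_rms.

    All thresholds are hypothetical defaults, not calibrated values.
    """
    frame = max(1, int(sample_rate * frame_ms / 1000))
    n_frames = len(samples) // frame
    pauses, start = [], None
    for f in range(n_frames + 1):
        if f < n_frames:
            chunk = samples[f * frame:(f + 1) * frame]
            quiet = math.sqrt(sum(x * x for x in chunk) / frame) < silence_rms
        else:
            quiet = False  # sentinel frame closes a pause that runs to the end
        if quiet and start is None:
            start = f
        elif not quiet and start is not None:
            if (f - start) * frame_ms >= min_pause_ms:
                pauses.append((start * frame / sample_rate, f * frame / sample_rate))
            start = None
    return pauses


# Synthetic demo: a 1 kHz tone plus an 18 kHz tone, sampled at 48 kHz.
SR = 48_000
demo = [math.sin(2 * math.pi * 1000 * i / SR) + math.sin(2 * math.pi * 18000 * i / SR)
        for i in range(480)]
print(high_band_energy_ratio(demo, SR, 15_000))
```

A ratio close to zero for a 15 kHz cutoff would be consistent with the low-pass filtering described above, though lossy compression on social media platforms can produce a similar cutoff, so the measure is a hint rather than proof.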

Based on the deepfake detection results, Azher’s analysis, and the clear mismatch between the audio and visuals, Soch Fact Check concludes that artificially generated audio was likely overlaid onto footage from a broadcast.

Virality

The video was shared here, here, and here on Facebook. Archived here, here, and here.

On TikTok, it was shared here, here, here, and here. Archived here, here, here, and here.

Conclusion: The audio in the viral video is artificial and was likely overlaid onto real footage. Additionally, the headlines in the broadcast make no reference to Pakistan.

–

Background image in cover photo: Facebook

To appeal against our fact-check, please send an email to appeals@sochfactcheck.com