Claim: Pakistan Army spokesperson Lt Gen Ahmed Sharif Chaudhry spoke favourably about Imran Khan and asked for his forgiveness in a video interview, while criticising the military chief, Field Marshal Syed Asim Munir.

Fact: The video has been digitally altered, likely using AI. The footage used for the manipulated clip is originally from a BBC Urdu interview, in which Chaudhry did not say anything of the sort.

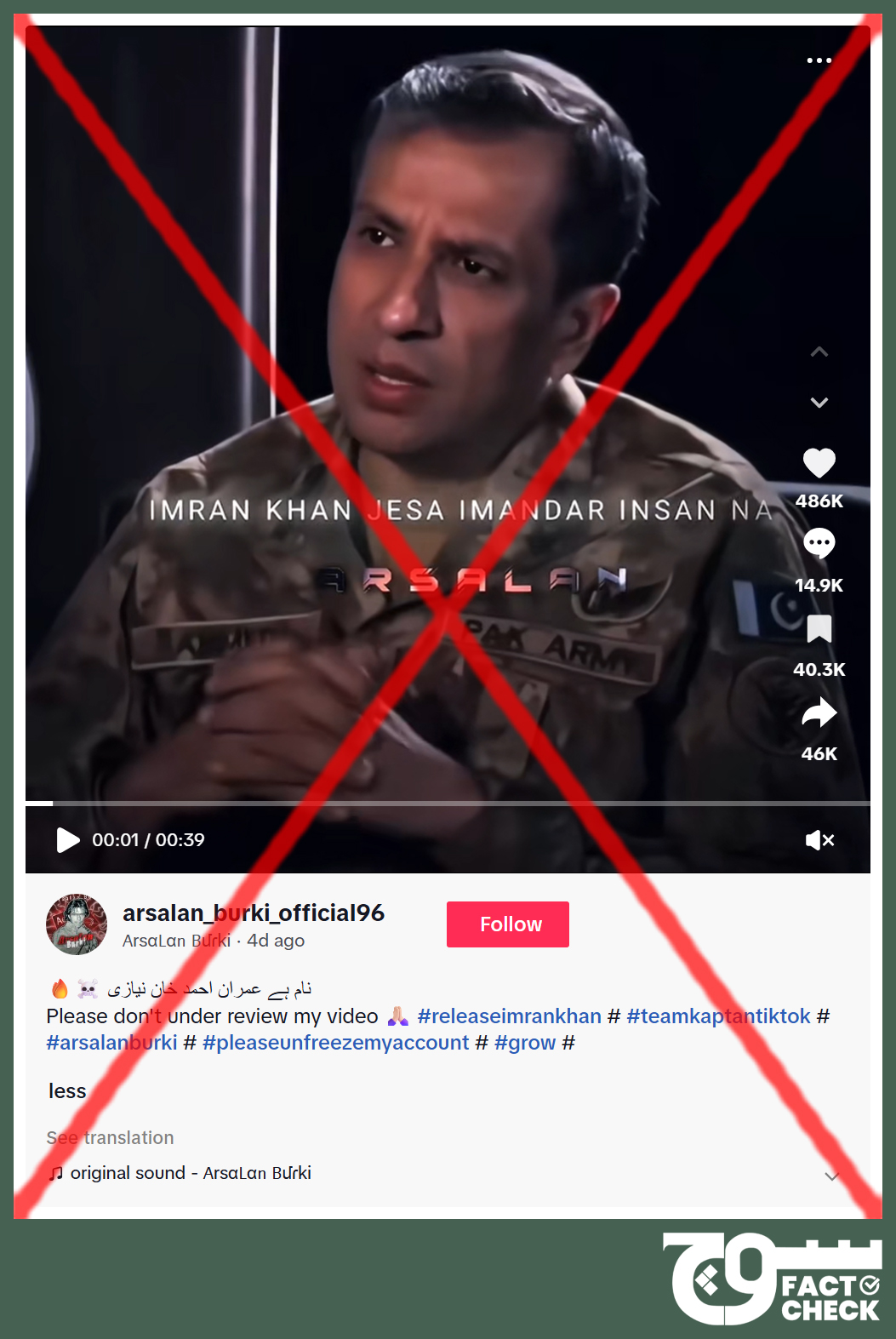

On 12 October 2025, TikTok user @arsalan_burki_official96 posted (archive) a video showing Lt Gen Ahmed Sharif Chaudhry, Pakistan Army’s spokesperson and the director-general of its media wing, the Inter-Services Public Relations (ISPR), allegedly speaking in favour of incarcerated former Prime Minister and founder of the Pakistan Tehreek-e-Insaf (PTI), Imran Khan.

The 39-second clip also shows Chaudhry allegedly criticising Field Marshal Asim Munir and asking for Khan’s forgiveness. His purported remarks in the video are as follows:

“عمران خان جیسا ایماندار انسان نہ کبھی پاکستان کی سیاست میں پیدا ہوا نہ ہوگا۔ ان کی شخصیت میں ایسی کشش ہے کہ پیسوں کی کوئی لالچ نہیں ہے۔ ہزاروں فیلڈ مارشل عمران خان کی جوتی پر قربان۔ آصف منیر صاحب کی اتنی اوقات ہی نہیں کہ عمران خان جیسے لیجنڈ سے ان کا موازنہ کیا جائے۔ ہم عمران خان سے درخواست کرتے ہیں کہ ہمیں معاف کردیا جائے۔ ہم پر رحم کیا جائے۔

[An honest person like Imran Khan has never been born in Pakistan’s politics and never will be. There is such a charm in his personality that he has no greed for money. May thousands of Field Marshals be sacrificed upon Imran Khan’s shoe. Asim Munir is not even worthy enough to be compared to a legend like Imran Khan. We request Imran Khan to forgive us. Please show mercy on us.]”

The TikTok video is accompanied by the following caption, which also includes the hashtags “#releaseimrankhan”, “#teamkaptantiktok”, and “#arsalanburki”:

“🔥☠️ نام ہے عمران احمد خان نیازی Please don’t under review my video 🙏🏻 عمران احمد خان نیازی: تیم کپتان کی آواز

[The name is Imran Ahmed Khan Niazi ☠️ 🔥 Please don’t under review my video 🙏🏻 Imran Ahmed Khan Niazi: The voice of Team Kaptan]”

We also found a disclaimer under the caption of the aforementioned video that states: “This information is AI generated and may return results that are not relevant. It does not represent TikTok’s views or advice. If you have concerns, please report at: Feedback and help – TikTok.”

The disclaimer is visible only under post captions and appears to differ from the “AI-generated” label that users can add, or that TikTok itself adds when it identifies such content. It is unclear whether this is a newly launched feature or one the platform is still rolling out and testing, as we did not come across any relevant official press releases.

The video by @arsalan_burki_official96 features loud music competing with Chaudhry’s voice; however, we were able to find a cleaner version, with no background music, posted (archive) on X (formerly Twitter) on 14 October.

The aforementioned X post is captioned as follows:

“اب خوش ہو انصافین 🙈😁😂 سچ بولنے لگ گیا ڈی جے والا بابو یہ ویڈیو AI سے بنائی گئی ہے، اور انشاءاللہ بہت جلد پوری دنیا یہ حقیقت میں ہوتا ہوا دیکھے گی، ہم سب یہ الفاظ اپنے کانوں سے سنے گے! انشاءاللہ وقت قریب ہے!

[Now be happy, Insafian 🙈😁😂 DJ wala babu has started speaking the truth. This video is made with AI and, God willing, the whole world will see this happening in reality very soon, we will all hear these words with our own ears! God willing, the time is near!]”

Fact or Fiction?

Soch Fact Check reverse-searched keyframes from the viral video using Yandex, which led us to two articles: one on MSN, sourced from Daily Times, and an unrelated one on BBC Bengali.

We then searched Google using the phrase “Pakistan Army spokesperson Lt Gen Ahmed Sharif Chaudhry” translated to Bengali — “পাকিস্তান সেনাবাহিনীর মুখপাত্র লেফটেন্যান্ট জেনারেল আহমেদ শরীফ চৌধুরী” — and found a similar image of the military official in a 23 May 2025 article on BBC Bengali.

The Daily Times article, published on 28 June 2025, notes that Chaudhry spoke to BBC Urdu.

Using this information, we found two interviews with the military spokesperson on BBC Urdu’s YouTube channel. One was uploaded on 21 May 2025 and the other on 27 June 2025; however, both appear to have been filmed on the same day, judging by the identical camera angles and journalist Farhat Javed Rabani’s attire.

Of the two, it is the 27 June video, titled “Army Denies Backchannel Talks with Imran Khan, Says Politics Is for Politicians: DG ISPR – BBC URDU”, that we suspect was manipulated to create the viral clip, given Chaudhry’s matching hand movements.

In neither of the two interviews does the army spokesperson speak favourably about ex-PM Khan, criticise Field Marshal Munir or ask for the PTI founder’s forgiveness.

Deepfake detectors

The first tool we used to test the video was DeepFake-O-Meter, developed by the University at Buffalo’s Media Forensics Lab (UB MDFL). We ran seven of its available detectors (AVSRDD, AltFreezing, CFM, LIPINC, LSDA, WAV2LIP-STA, and XCLIP), which estimated the probability of the video having been generated using artificial intelligence (AI) at 100.0%, 36.5%, 46.8%, 94.5%, 51.5%, 52.9%, and 99.1%, respectively.

We uploaded both versions of the video to the Hiya Deepfake Voice Detector, where a lower score indicates a higher likelihood of manipulation. The tool analysed portions of the version with music at different points, returning scores of 66, 64, and 59 out of 100 and labelling it “uncertain”. The cleaner version scored 1, 1, and 2 out of 100 and was labelled “likely a deepfake”.

We then used the InVID-WeVerify verification tool, which also uses Hiya, for a deeper analysis. The result for the version with music was “inconclusive”, as there was “excessive silence or excessive background noise present in the audio file”. For the version without music, the tool said the sample was “very likely AI-generated”.

Hive Moderation said the probabilities of the two samples — with and without music — being AI-generated were 0.7% and 0.3%, respectively.

Similarly, Zhuque AI Detection Assistant yielded respective probabilities of 2.87% and 20.16%.

According to Deepware Scanner, however, there was “no deepfake detected” in either of the two versions of the viral video.

Soch Fact Check also tested the video with background music in the Global Online Deepfake Detection System (GODDS), a tool developed by Northwestern University’s Security & AI Lab (NSAIL). It uses a combination of various models along with human analysis to provide a holistic summary of the results.

The GODDS analysts said they “believe this video is likely generated via artificial intelligence”. When we provided them with the link to Chaudhry’s BBC Urdu interview, they confirmed “it may have been used to create the manipulated video”.

GODDS analysis explained

GODDS used 21 deepfake detection algorithms for the visual content and 20 for the audio component. Two trained analysts also examined the clip.

The predictive models returned the following results for the visual and audio content:

- The video is likely to be fake with a probability above 0.5, according to 3 of the 21 predictive models; according to the remaining 18 models, that probability is below 0.5.

- The audio is likely to be fake with a probability above 0.5, according to 5 of the 20 predictive models; according to the remaining 15 models, that probability is below 0.5.

According to the human analysts, in the frames between the 0:01 and 0:16 marks, “the lower teeth display noticeable changes in alignment and shape, suggesting inconsistencies incompatible with natural anatomical stability”.

They added that the little finger visible at the 0:03 mark “exhibits unnatural curvature and disproportionate articulation relative to adjacent fingers, indicative of possible generative artefacts”. Moreover, at the 0:18 mark, “the formation of the teeth appears irregular and lacks realistic boundary definition”.

Sound engineer’s analysis

Soch Fact Check also sought a comment from Shaur Azher, who teaches sound design and recording at the University of Karachi and the Shaheed Zulfikar Ali Bhutto Institute of Science and Technology (SZABIST). He also works as an audio engineer at our sister organisation, Soch Videos, and specialises in mixing and mastering audio.

Azher said the recording contains several signs of deliberate digital modification such as “synthetic frequencies, splice edits, an absence of natural room acoustics, and artificial dynamic processing”, all of which “collectively confirm that the recording is not an authentic or continuous live capture”.

He provided the following observations to support his findings:

- Synthetic or static frequencies: The spectrographic examination reveals the presence of static and synthetic frequencies, which are inconsistent with natural human speech. These anomalies typically indicate artificial audio generation or digital interference.

- Splice gaps and editing artefacts: Distinct discontinuities and splice gaps are visible within the waveform, demonstrating that the audio has been edited or segmented. Such artefacts are not present in continuous, unaltered recordings.

- Absence of room tone and reverberation: The recording exhibits a complete lack of ambient background tone or room reverberation, both of which are characteristic of genuine environments. Their absence strongly suggests that the audio was recorded or processed under artificial conditions.

- Dynamic processing (upward compression): Analysis indicates the application of upward compression, a signal-processing technique used to elevate lower-level audio elements. This type of dynamic manipulation is not standard in raw or field recordings.

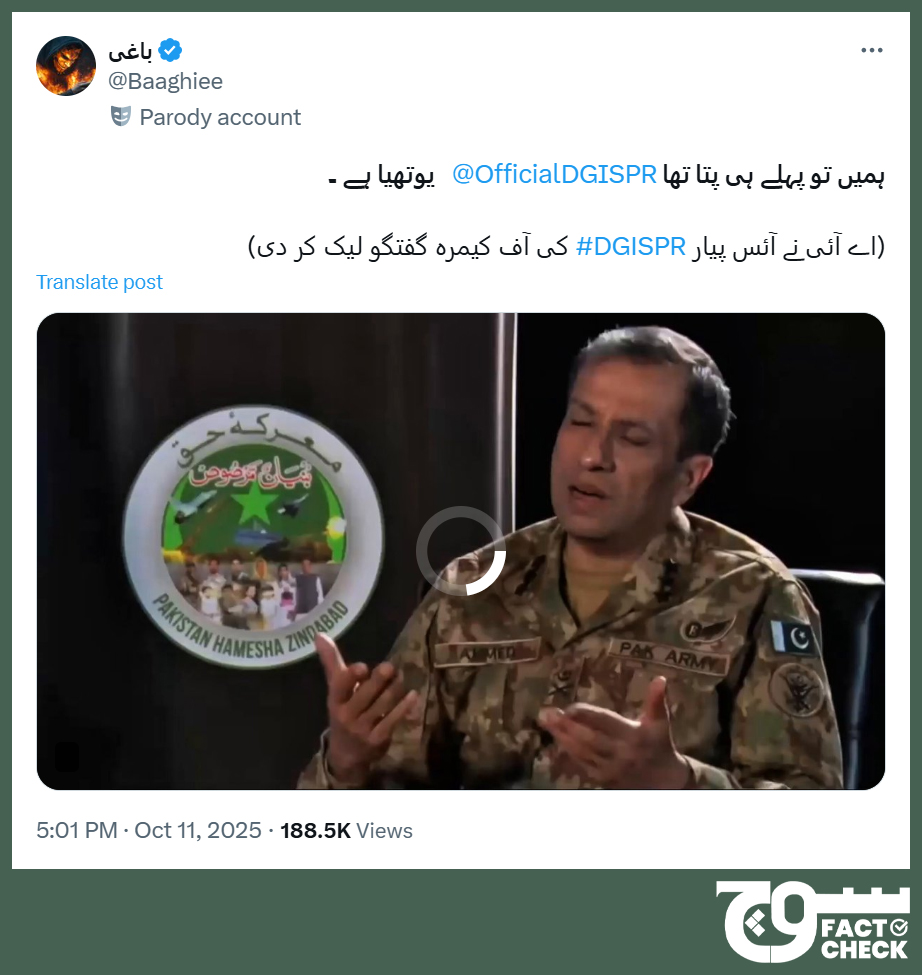

Lastly, we found a better-quality version of the video, without any background music, posted (archive) on 11 October 2025 by X user @Baaghiee. We believe this may be the first time the clip was uploaded online.

@Baaghiee (archive) is marked as a “parody account” — a change introduced by X in January 2025 “to increase transparency and to ensure that users are not deceived” — and their bio states that they post “Parody, AI , memes & satire”.

The X post by @Baaghiee includes the following caption:

“ہمیں تو پہلے ہی پتا تھا @OfficialDGISPR یوتھیا ہے ۔ (اے آئی نے آئس پیار #DGISPR کی آف کیمرہ گفتگو لیک کر دی)

[We already knew @OfficialDGISPR was a Youthiya. (AI leaked Ice-Pyaar #DGISPR’s off-camera conversation)]”

“Youthiya”, a portmanteau combining the word “youth” with an abusive term in colloquial Urdu, is a derogatory way of referring to PTI supporters. “Ice-Pyaar” is a play on the name of the military’s media wing, the ISPR.

Soch Fact Check, therefore, concludes that the video in question was digitally altered, likely using AI tools.

Virality

We found the clip posted here, here, here, here, and here on TikTok, here on Facebook, here and here on Instagram, and here and here on YouTube.

The video was also shared on X here, here, and here. The original parody post by @Baaghiee has been viewed over 188,100 times so far.

Conclusion: The video has been digitally altered, likely using AI. The footage used for the manipulated clip is originally from a BBC Urdu interview, in which Chaudhry did not say anything of the sort.

Background image in cover photo: Wikimedia Commons

To appeal against our fact-check, please send an email to appeals@sochfactcheck.com