Claim: Iran’s Ministry of Foreign Affairs praised Pakistan for calling for stability and condemning foreign interference in Iran during the recent protests.

Fact: The viral clip is manipulated. Baghaei did not mention Pakistan at any point in the original speech. Additionally, multiple AI-based deepfake detection tools and audio-visual forensic analysis found strong indicators that the clip was AI-generated or digitally altered.

Facebook account Pakistan Strategic Forum shared a video with the following caption: “Iran Remembers 🇮🇷 🤝🇵🇰

“Our brotherly neighbour Pakistan stood with us consistently.” – Iran’s Ministry of Foreign Affairs”

In the video, the Iranian official says:

“During the 2025 Zionist aggression on our soil, certain regional partners despite close historical and multilateral bonds, chose silence or stepped back from clear condemnation bound by deepening ties to the aggressor. We remember those deepening choices. Now in these foreign-backed unrests, the pattern repeats. Trusted partners hesitate or distance themselves while our brotherly neighbour Pakistan consistently calls for Iran’s stability and condemns external meddling. Iran never forgets true solidarity in tough times.”

Protests in Iran

Protests in Iran began on 28 December 2025, when shopkeepers in Tehran’s Grand Bazaar went on strike after the Iranian rial hit a record low against the US dollar. The unrest quickly spread from Tehran to cities and towns in multiple provinces, evolving into street protests with political chants.

Authorities acknowledged the protests and said they would listen with patience, but “security forces responded with lethal force to disperse protesters, unlawfully using force, firearms and other prohibited weapons, as well as conducting sweeping mass arrests,” reported Amnesty International.

On 18 January, Supreme Leader Ayatollah Ali Khamenei publicly acknowledged for the first time that thousands had been killed, blaming the US for the deaths. According to Amnesty International, large-scale protests no longer appear to be taking place amid the heavy security presence, and ongoing internet restrictions have made it difficult to obtain clear information, even as protesters’ grievances remain unresolved.

Fact or Fiction?

Soch Fact Check reverse-searched keyframes of the viral video and traced it to the original version, shared by the Indonesian broadcaster Metro TV on 13 January 2026 under the title “Iran Foreign Ministry Official Condemns U.S. and Israeli Statements Amid Unrest.” Baghaei speaks in Persian in the original footage, whereas the video in the claim shows him speaking in English. This was the first indication that the viral clip is doctored.

According to the video’s description, “Iran’s Foreign Ministry spokesperson Esmaeil Baghaei held a press conference in Tehran addressing the ongoing nationwide unrest and foreign involvement. Baghaei accused statements from U.S. and Israeli officials of fueling violence and chaos inside Iran, framing them as interventionist and aimed at destabilizing the country. He said diplomatic communication with the United States remains open through official channels, but Iran will defend its sovereignty and is prepared to counter any aggression. The remarks come amid widespread protests, a government‑imposed internet blackout, and heightened tension with Washington over possible intervention.”

Soch Fact Check contacted Farhad Souzanchi, editor-in-chief of Factnameh, a Toronto-based Persian-language fact-checking outlet, to help with the translation of the original footage. Souzanchi confirmed that the Iranian official did not mention Pakistan at any point during his actual speech.

Baghaei’s presser was covered by multiple news outlets such as Rudaw.net, Tesaa World and bne IntelliNews. However, none of the reports mentioned the statement attributed to the Iranian official in the viral posts, nor did they include any other comments about Pakistan.

Soch Fact Check also searched for an English-dubbed version of Baghaei’s speech shared by any broadcaster, but found no credible results.

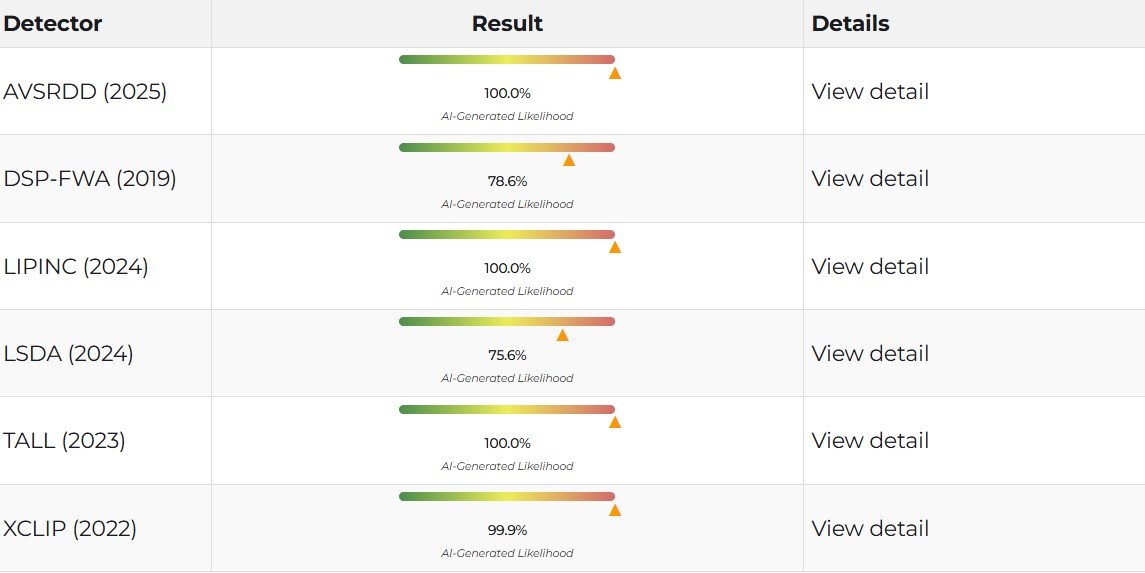

Suspecting that the version in the claim is manipulated, Soch Fact Check examined the video and ran it through DeepFake-O-Meter, which analysed it using multiple AI-based detection models. The results for the video are as follows:

DeepFake-O-Meter results

We first used the AVSRDD (2025) model, an audio-visual deepfake detection method based on audio-visual speech recognition (AVSR) that leverages speech correlation. The model uses dual-branch encoders for audio and video, so each modality can also be assessed independently. It rated the video with a 100% likelihood of being fake.

Next, we used the DSP-FWA (2019) model, which detects deepfakes by identifying the face-warping artifacts introduced when synthesised faces are resized and blended into original images or videos. The “DSP” in its name refers to its dual spatial pyramid design, which helps it handle faces at different resolutions. It rated the video’s probability of being fake at 78.6%.

The LIPINC (2024) model is a deep-learning-based method that detects lip-syncing deepfakes by identifying spatial-temporal discrepancies in the mouth region. It rated the video as 100% fake.

The video was also analysed using LSDA (2024), a deepfake-detection model designed to assess whether a video or image is fully or partially synthesised using AI. The model evaluates visual and temporal cues, such as facial movements, lip synchronisation, and texture consistency, to estimate the likelihood of synthetic manipulation. It rated the clip’s probability of being fake at 75.6%.

We also used the TALL (2023) model as it focuses on checking online videos, which are often compressed or altered in ways that hide fine details. By testing whether the video remains coherent after details are removed, TALL helps reveal manipulation that may not be obvious to the eye. It rated the probability of the video being fake at 100%.

Lastly, we used the XCLIP (2022) model, which rated the likelihood of the video being fake at 99.9%. This model uses cross-frame attention to analyse how frames relate to each other over time. This makes it good at spotting inconsistencies in facial movements, expressions, and temporal flow, which are common signs of deepfakes.
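As a reading aid, the six scores reported above can be tallied in a few lines of Python. This is only a sketch: the 50% cut-off and the use of a simple mean are assumptions made for illustration, not part of DeepFake-O-Meter or of the method used in this fact-check.

```python
from statistics import mean

# Fake-probability scores (percent) exactly as reported in the text above.
scores = {
    "AVSRDD (2025)": 100.0,
    "DSP-FWA (2019)": 78.6,
    "LIPINC (2024)": 100.0,
    "LSDA (2024)": 75.6,
    "TALL (2023)": 100.0,
    "XCLIP (2022)": 99.9,
}

# Assumption for illustration only: treat anything above 50% as "flagged".
THRESHOLD = 50.0

flagged = [name for name, score in scores.items() if score > THRESHOLD]
average = mean(scores.values())
lowest = min(scores, key=scores.get)

print(f"{len(flagged)} of {len(scores)} models scored above {THRESHOLD}%")
print(f"Mean score: {average:.2f}%")
print(f"Lowest score: {lowest} at {scores[lowest]}%")
```

Even the most cautious of the six models places the clip well above the illustrative cut-off, which is why the individual results point in the same direction.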

Audio forensic analysis:

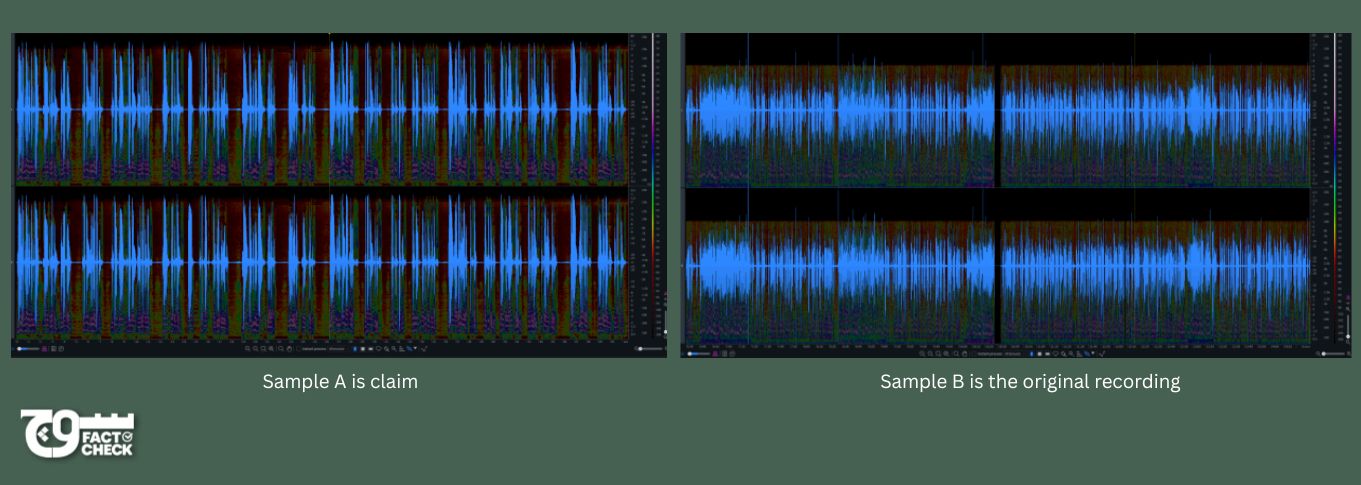

Shaur Azher, an audio engineer at our sister company Soch Videos, compared the viral clip (Sample A) against extended original footage of Baghaei (Sample B). His comparison is based on the technical characteristics of the two recordings, not on the wording or context of the speech.

Spectrograms of the viral clip and original recording

Dynamic range and leveling

Sample A:

- Exhibits uniform and compressed dynamics

- Consistent vocal leveling throughout

- Peak levels observed at approximately 0.1 dB

- Absence of natural volume fluctuation

- Suggests post-production normalization and compression

Sample B:

- Displays irregular and natural dynamics

- Presence of volume variations

- Natural clipping observed in high-energy speech segments

- Plosive sounds (“P”, “B”, “T”) clearly audible
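One common way to put a number on “compressed” versus “natural” dynamics is the crest factor, the gap in decibels between a signal’s peak level and its average (RMS) level. The sketch below is not Azher’s procedure and does not use the actual recordings; it runs on two synthetic tones, and the function name `level_stats` and all signal parameters are hypothetical choices made for illustration.

```python
import math

def level_stats(samples):
    """Return (peak_db, rms_db, crest_db) for samples scaled to [-1, 1]."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    peak_db = 20 * math.log10(peak)
    rms_db = 20 * math.log10(rms)
    return peak_db, rms_db, peak_db - rms_db

SAMPLE_RATE = 8000   # Hz, arbitrary value for the synthetic signals
N = SAMPLE_RATE      # one second of audio

def tone(i):
    """A 200 Hz sine wave standing in for a voice."""
    return math.sin(2 * math.pi * 200 * i / SAMPLE_RATE)

def swell(i):
    """A slow 2 Hz loudness envelope that rises and falls between 0 and 1."""
    return 0.5 - 0.5 * math.cos(2 * math.pi * 2 * i / SAMPLE_RATE)

# Synthetic stand-in for levelled audio: loudness never changes.
levelled = [0.9 * tone(i) for i in range(N)]
# Synthetic stand-in for natural audio: loudness swells and fades.
natural = [0.9 * swell(i) * tone(i) for i in range(N)]

_, _, crest_levelled = level_stats(levelled)
_, _, crest_natural = level_stats(natural)

print(f"Crest factor, levelled signal: {crest_levelled:.2f} dB")
print(f"Crest factor, natural signal:  {crest_natural:.2f} dB")
```

The signal with varying loudness shows the larger crest factor. Heavy compression and normalization push this figure down, which is the pattern described for Sample A.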

Frequency spectrum analysis

Sample A:

- Frequency range: 20 Hz – 15,000 Hz

- Extended high-frequency content

- Indicates digital enhancement or reconstruction

- Typical of AI-generated or heavily processed speech

Sample B:

- Frequency range: 20 Hz – 10,000 Hz

- Natural roll-off in higher frequencies

- Consistent with broadcast microphones and live recording environments
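The upper limit of a recording’s frequency range can be estimated by taking a Fourier transform and finding the highest frequency that still carries meaningful energy. The following sketch shows the idea on synthetic test tones rather than the actual clips. The function `highest_active_frequency`, the -40 dB floor, and the signal parameters are all assumptions for illustration, and a real analysis would normally use an audio library and a fast Fourier transform.

```python
import cmath
import math

def highest_active_frequency(samples, sample_rate, floor_db=-40.0):
    """Return the highest frequency (Hz) whose magnitude is within
    floor_db of the strongest component, using a plain DFT."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2 + 1):  # bins from 0 Hz up to the Nyquist frequency
        acc = sum(
            s * cmath.exp(-2j * math.pi * k * i / n)
            for i, s in enumerate(samples)
        )
        magnitudes.append(abs(acc))
    threshold = max(magnitudes) * 10 ** (floor_db / 20)
    top_bin = max(k for k, m in enumerate(magnitudes) if m >= threshold)
    return top_bin * sample_rate / n

SAMPLE_RATE = 32000  # Hz, arbitrary value for the synthetic signals
N = 320              # 10 ms window, giving 100 Hz per frequency bin

def two_tones(high_hz):
    """A strong 1 kHz tone plus a weaker tone at high_hz."""
    return [
        math.sin(2 * math.pi * 1000 * i / SAMPLE_RATE)
        + 0.1 * math.sin(2 * math.pi * high_hz * i / SAMPLE_RATE)
        for i in range(N)
    ]

# Synthetic stand-ins whose content stops at the limits quoted in the text.
top_a = highest_active_frequency(two_tones(15000), SAMPLE_RATE)
top_b = highest_active_frequency(two_tones(10000), SAMPLE_RATE)

print(f"Highest active frequency, signal A: {top_a:.0f} Hz")
print(f"Highest active frequency, signal B: {top_b:.0f} Hz")
```

On these test signals the function recovers the 15,000 Hz and 10,000 Hz limits that were built into them. On real speech the result depends heavily on the chosen floor and window length, so it should be read as a rough indicator only.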

Noise floor analysis

Both samples exhibit:

- Noise floor: Approximately -44 dB

This suggests that the base environmental noise remains similar.

However, in Sample A, noise appears digitally stabilized, whereas in Sample B, it fluctuates naturally with speech.

Audio processing artifacts

Sample A:

- Absence of breathing sounds

- Reduced mouth noise

- Smooth transitions between phonemes

- Lack of micro-dynamic variations

- Presence of synthetic smoothness

Sample B:

- Audible breathing

- Natural mouth and tongue movement sounds

- Minor inconsistencies

- Environmental reflections

- Realistic microphone interaction

Visual and behavioural analysis

Facial and lip movement synchronisation

Sample A:

- Lip movements appear calculated and uniform

- Limited spontaneous facial muscle activity

- Reduced micro-expressions

- Timing appears optimised for dubbed speech

Sample B:

- Organic facial movement

- Spontaneous blinking and eye movements

- Natural head movements

- Consistent synchronisation with original speech patterns

Head and eye movement analysis

Sample A:

- Restricted head movement

- Controlled posture

- Reduced natural variability

- Limited eyeball movement and blinking

Sample B:

- Natural head turns

- Responsive eye movements

- Real-time engagement with audience

- Authentic public-speaking behaviour

Vocal delivery assessment

Sample A:

- Highly monotone delivery

- Limited emotional variation

- Robotic speech pattern

- Uniform pacing

- Absence of stress or emphasis markers

These characteristics are commonly associated with:

- AI-generated voice models

- Advanced voice synthesis

- Heavy post-production voice processing

Sample B:

- Natural intonation

- Emotional modulation

- Variable pacing

- Realistic emphasis patterns

- Human speech behaviour

Based on comprehensive audio and visual forensic examination, Azher drew the following conclusions:

1. Sample B represents an authentic broadcast recording with natural acoustic, behavioural, and environmental characteristics.

2. Sample A demonstrates multiple indicators of artificial or heavily processed production, including:

- Uniform dynamic leveling

- Extended frequency spectrum

- Robotic vocal patterns

- Absence of natural speech artifacts

- Controlled facial synchronisation

3. The technical features of Sample A are consistent with:

- AI-generated voice dubbing, or

- Heavily engineered post-production narration.

4. There is no technical evidence to support that Sample A is an official or original broadcast translation.

Sample A is highly likely to be an artificially generated or digitally reconstructed version of the original speech and does not represent an authentic broadcast source.

Sample B maintains characteristics consistent with real-time, unprocessed public address recordings.

The original broadcast was delivered in Persian, and a translation of the original footage confirmed that Pakistan was not mentioned. Moreover, multiple AI-based detection tools rated the video as highly likely to be fake. Finally, Soch Fact Check’s audio-visual forensic analysis found strong indicators of AI-assisted manipulation in the viral clip, including synthetic voice dubbing and digitally reconstructed visuals.

While the exact method used cannot be independently verified, the available evidence confirms that the video is not authentic.

Virality

The claim was shared here, here, and here on Facebook. Archived here, here, and here.

On X, it was shared here, here, here, and here. Archived here, here, here, and here.

Conclusion: The claim that Iran’s Foreign Ministry spokesperson Esmaeil Baghaei praised Pakistan for condemning external interference amid the recent protests is false. The viral clip was likely manipulated using AI.


To appeal against our fact-check, please send an email to appeals@sochfactcheck.com